Generative artificial intelligence can save educators time, help personalize learning, and potentially close achievement gaps. That’s what artificial intelligence experts have said when they talk about the technology’s potential to transform education.

But not everyone agrees. Open-ended responses to an EdWeek Research Center survey conducted in the spring showed that many educators are skeptical of the technology.

The reality is that AI has its limits. For instance, it can produce inaccurate or biased responses when the data it draws from is faulty. Many districts and states have created or are in the process of creating guidelines for how to incorporate AI into education, taking into account that it’s not a perfect tool. The Los Angeles school district has even become a cautionary tale for what not to do in harnessing AI for K-12 education.

Benjamin Riley, the founder and CEO of think tank Cognitive Resonance, argues that schools don’t have to give in to the hype just because the technology exists. (Riley was previously the founder of Deans for Impact, a nonprofit working to improve teacher training.)

On Aug. 7, Cognitive Resonance released its first report, titled “Education Hazards of Generative AI.”

In a phone interview with Education Week, Riley discussed the report and his concerns about using AI in education.

The interview has been edited for brevity and clarity.

Could you explain why you don’t think it’s accurate to say AI is the most important technological development in human history?

It’s good to come back to what a large language model really is. It’s a prediction device. It takes text that you type in and it predicts what text it should put back out to you. The way it does that is purely by statistical correlations between associations of words. What’s remarkable about it as a technology is that process and that ability that we have now feels superconversational. It feels like you can talk to it about anything and, in a sense, you can. There’s no limit to the range of things it can say back to you in response to text you put into it. But that’s all it can do.

It’s not thinking. It’s not reasoning. It’s not capable of understanding that you have a mind and a life and ideas, you might have misconceptions. It doesn’t understand or have any capacity to do any of that. The computer was a huge deal. Will AI sit alongside the computer as a development in our society? I don’t know, but I certainly don’t think we’re at a point right now that the tool, as it exists today, is even in the conversation of things like fire or the printing press in terms of social impact.

What’s your biggest concern about AI use in education?

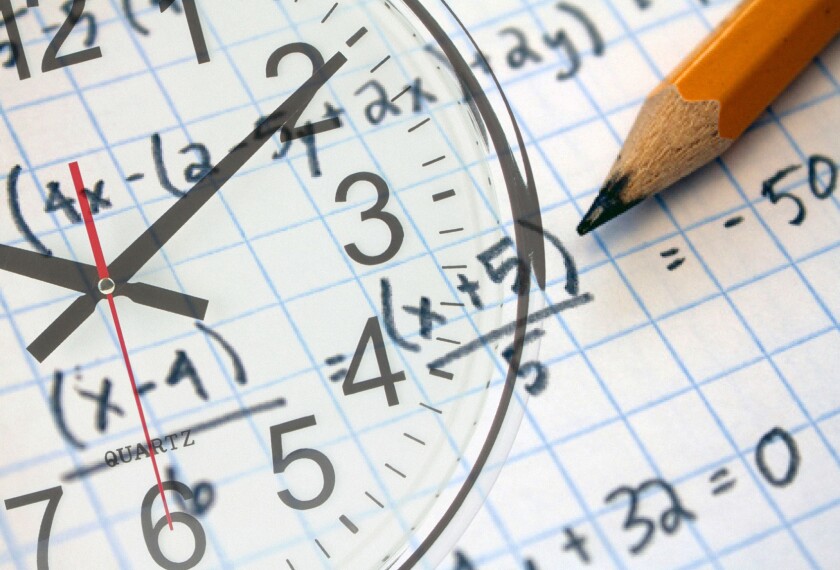

Top of my list is using [AI tools] to tutor children. They’re absolutely not going to be effective at that and they might actually be harmful at that. We’re already starting to get some empirical evidence of this. Some researchers at Wharton recently published a study of a randomized controlled trial where high school math students using ChatGPT learned less than their peers who had no access to it during the time of the study. That’s completely predictable because what the tool does is automate cognition for you. When you lean on it to do your work, you’re doing less effortful thinking, which means you’re learning less.

One of the things that’s particularly frustrating me about the education hype around AI right now is that, in other dimensions, we’re starting to see how technology has had real harms on social cohesion and solidarity. Our politics are polarized. Democracy is at risk in many countries. All of these things, I think, are interconnected. We’re starting to be dimly aware of that, and yet, here comes a tool that just throws more fuel onto the technological fire of society. People really need to stop and think about this. The thing that frustrates me the most is people are just saying, ‘Oh, it’s inevitable, so we have to get on board.’ It absolutely is not. We can make choices.

People say AI is the future, that students will use it in their careers, so we need to teach them how to use it. Do you agree with that?

There are a couple of ways to think about that. How much of the school system right now is oriented to teach kids how to use a computer? Is that really something that we spend a ton of time in our education system sort of focusing on? I would say that no, we don’t. Do kids use computers in school? Yes. Is there a computer lab that they might be accessing? Sure. Are there computer science classes? Yeah, but only a few take them. This technology just becomes part of the fabric of our existence. Something that is undeniably huge as a technological development, which is the creation of a computer, hasn’t really changed a lot of our educational practices. So I’m not sure why we need to assume that AI should be treated differently.

Is there anything you think schools can use generative AI for?

Once you understand the limitations of generative AI, you can make use of it in ways that mitigate the hazards and the harms that may result. Once you understand that it’s just a statistical, next-word prediction engine, you don’t treat the guidance that it gives you as sacrosanct or some knowledgeable search engine that thought deeply about what you were asking and came back with the best answer.

So a teacher says, “Give me a lesson plan for this Friday, 60 minutes about this topic.” There might still be some stuff in that [answer] that has problems, but the teacher has their intelligence. They have their know-how and experience, and they can look at that lesson plan and then decide how much of it they want to use or not. That’s fine. I don’t have a problem with that.

The other area that I know has been positive and makes sense given the nature of the technology is creating materials for English learners. That’s playing to the strong suit of these models. They’re language models. So if what you need is translation between languages, they’re pretty good at that.

Many experts are calling for AI literacy in schools. Is that something you think is important?

It depends what you mean by AI literacy. What there is a lot of right now are efforts that purport to be about AI literacy, that are essentially trying to teach you how to use the tool, how to write a good prompt. That’s not what I mean by AI literacy.

I want people to be critical thinkers about this technology. [At the] operational level: how it does what it does and why you cannot trust it to say things that we humans would consider true. There are so many ethical issues: the environmental costs it takes to produce it, the ways in which it replicates bias that exists in society.

I want to [emphasize] having a better understanding of how we humans think and learn from each other, elevating that, and making efforts to foster AI literacy.
